The Computer Can Change People’s Voices. Why Is It Dangerous?

When the first reasonably capable PCs and black-and-white-screen smartphones appeared in the early 2000s, they astonished many people. Today, virtual reality, smartphones with constant Internet connectivity, and other modern technologies no longer shock anyone. Neural networks, however, can still be remarkably entertaining. Besides drawing pictures from keywords, they can now change a person’s voice in real time. Voicemod recently unveiled a system that lets users speak in the voice of an astronaut, a robot, or the actor Morgan Freeman. Soon, users will be able to use the new feature in any program, including video games and messaging apps, so you could pull amusing and convincing pranks on your friends. But this cool technology has one major downside. Read on to find out what it is.

Voice Changer Program

The program of the same name, created by the Spanish company Voicemod, lets you alter your voice in real time. Once you set it up to work with games or communication apps, you can speak with the voice of a woman, a man, a zombie, an alien, and so on. Access to the full list of voices requires a paid plan, although for some people the voices in the free plan are enough.

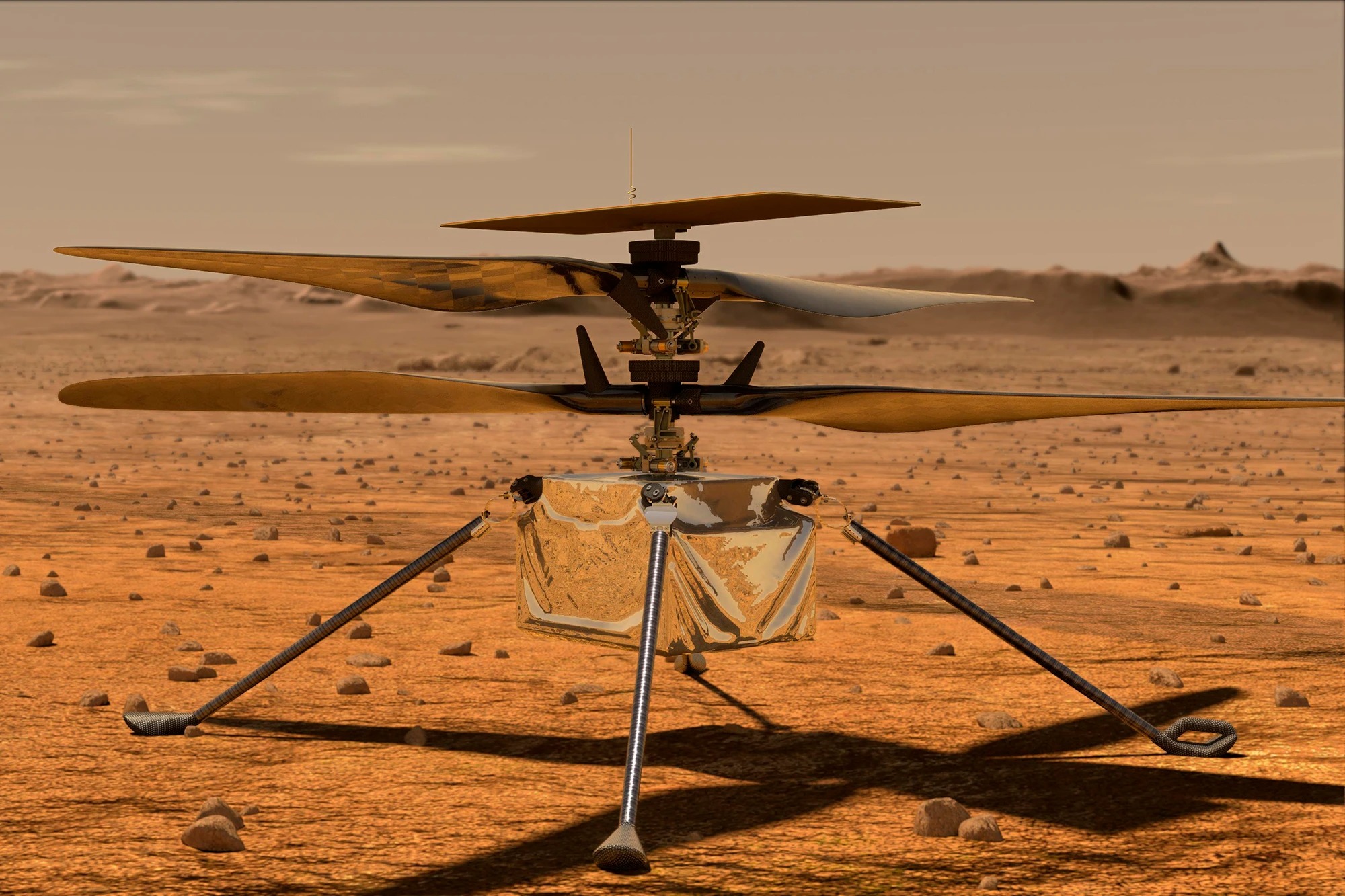

News that the company has created a new technology called AI Voices has recently been trending on the Internet. It lets you speak with the voice of the actor Morgan Freeman, an astronaut, the HAL 9000 computer from Arthur C. Clarke’s 2001: A Space Odyssey, and others. A machine learning system converts one person’s voice into another, and the data is processed on the user’s computer rather than in the cloud.

Currently, the technology is available only to a limited number of people. However, anyone can apply for beta testing on the company’s website, and the company promises to give access to the updated program in the coming weeks. The Voicemod website says that the utility puts little load on the processor and can run even on cheap computers. How heavily the new AI Voices feature loads the computer is unknown, but the company gives no warnings, which suggests it should run well.

Fraud Technologies

So what is the danger of this technology? By itself, a program that turns a person’s voice into the voice of a famous actor is harmless. However, the very fact that even a weak computer can do this is alarming. After all, there are plenty of skilled developers around the world who could build similar technology and use it with less than good intentions. For example, someone could change their voice, pose as a bank employee, and swindle money out of gullible people.

In 2019, a group of programmers created an algorithm that can generate an audio file by copying a person’s voice from another recording. The technology requires two audio files: one contains a sample of the voice to be replicated, and the other contains the phrase to be spoken in that voice. Given these two files, the program quickly converts the voice in the second recording to sound like the first. In other words, technology that could threaten people’s safety already exists. There may well be many more advanced solutions out there that we are simply unaware of.

Some scammers are already known to call people just to record their voice. Now imagine that such a recording is loaded into the program mentioned above, and the attackers create an audio file asking for money to be transferred to a bank card. Using this recording, they could then call relatives and friends of the person whose voice was sampled. Such a scam is far more likely to succeed than a call from obvious fraudsters pretending to be bank employees.

Modern Bank Robbery

This may sound like paranoia, but it has already happened, and the case in question is a major bank robbery. In 2021, a manager at a credit institution in the United Arab Emirates was negotiating with a businessman who was about to close a critical deal. To complete it, the manager had to transfer $35 million to the client’s account. After the transaction was finished, it turned out that the money had gone to the robbers’ account, and that the bank employee had been talking not to the real client but to a clone of his voice. According to Forbes, around 17 people were involved in the crime.
