How Machines Are Taking Over Art

Over the past year, publicly accessible image-generation systems based on machine learning have made a breakthrough. These AI generators produce high-quality images suitable for advertising, publishing and design and, as the technology progresses, even art objects. But will this lead to artists and designers being replaced by algorithms? Let’s find out!

Dehumanized Effort

Chinese contemporary artist Ai Weiwei is one of the art world’s iconic figures. In 2011 he topped ArtReview magazine’s ranking of the most influential people in the art world, and his works have been exhibited many times at the most prestigious galleries and major exhibitions (Tate Modern in London, Documenta in Kassel, etc.). Yet the artist has often remarked, with some irony, that his work is an idea that does not require recognizing the people behind it.

Indeed, if your art piece consists of 100,000,000 (no extra zeroes here!) hand-painted porcelain sunflower seeds scattered in a thick layer across the floor of the largest hall in London’s Tate Modern, you cannot finish it without the 1,600 workers who spent several years making those seeds. Incidentally, Ai Weiwei is not the first to make art out of other people’s anonymous labor. Marcel Duchamp’s famous Fountain (actually a store-bought urinal), created in 1917, gave rise to an entire art form with the clever name of the ready-made.

Soulless Creator

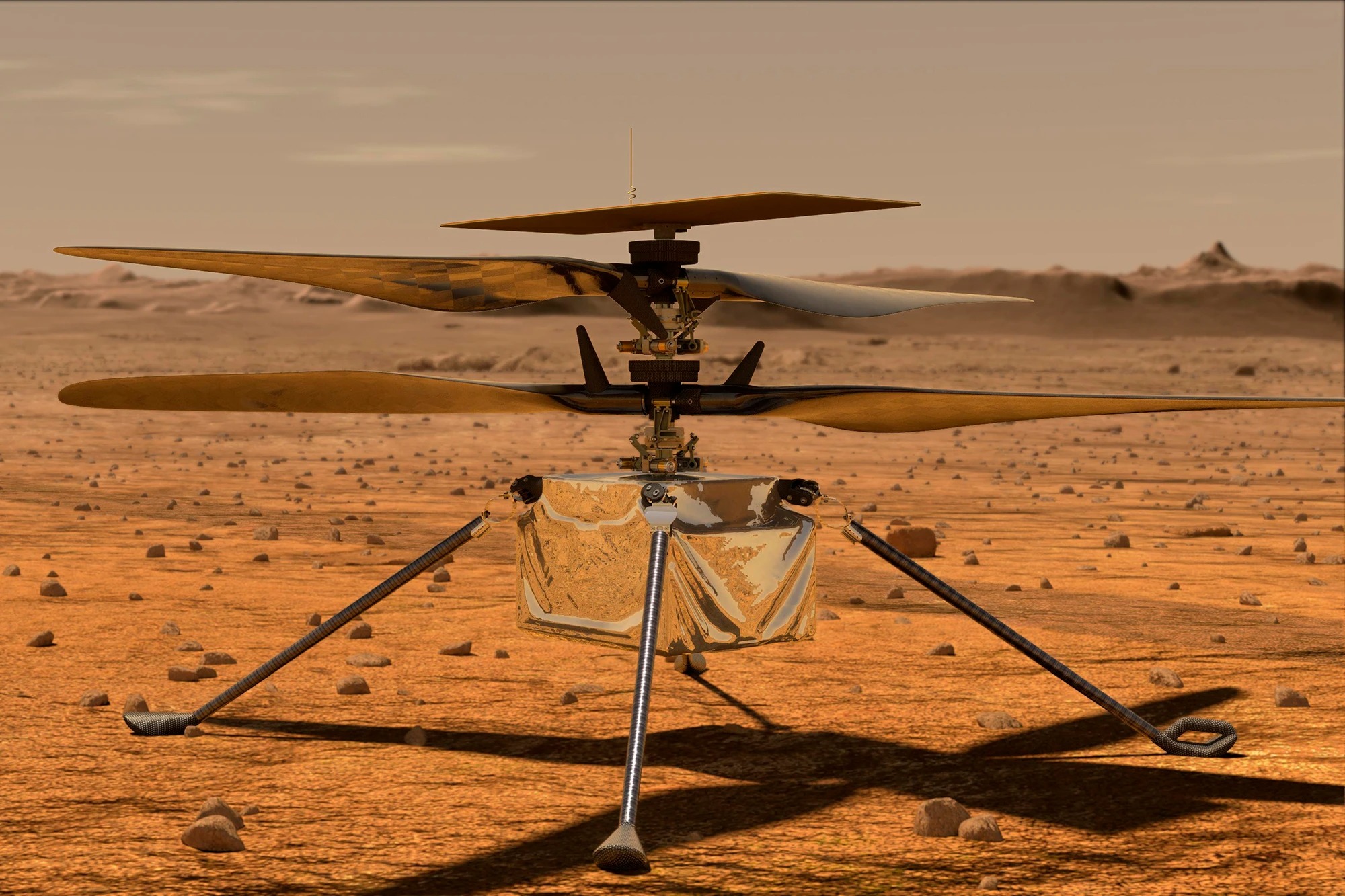

This historical background helps explain what is happening in art right now, especially over the last few months. The powerful generative neural networks that appeared not long ago are now being used worldwide. From just a short text description, they can create a large, complex and, if the person operating the program wishes, highly detailed image.

Thanks to extensive media coverage, the best known of these systems is DALL-E 2, created by OpenAI in 2022. Even its predecessor, DALL-E, launched a year earlier, caused a lot of concern because it could create fairly photorealistic images of events that had never happened. Photoshop, of course, has been around for a long time and has become a household name, but a generative network dramatically lowers the barrier to entry for creating fakes. To get a quality result with DALL-E, you don’t have to spend years honing your skills or hours fiddling with an image. This is why DALL-E was never fully opened to the general public, and why DALL-E 2 came with numerous restrictions from the get-go (for example, it does not allow images of real politicians and limits sensitive or graphic text prompts).

Entering the Mainstream

A couple of months ago, a Cosmopolitan cover generated with DALL-E 2 caused some anxiety among designers, since it was announced that the algorithm took 20 seconds to create it. Technically, that is true: generating the final picture took exactly that long. Still, finding the right idea and composition and then refining the picture took many hours of work by a real designer. It was also her first experience with a generative network, so how quickly she learned is impressive in itself.

Understandably, the art community was alarmed, and some went so far as to call DALL-E 2 an existential threat. The early output of generative networks looked like abstract digital fantasies. What we are seeing now is high-quality, made-to-order commercial graphics.

However, more confident creators were pleased rather than apprehensive. They had been given a new tool whose possibilities, combined with traditional ones, are mesmerizing. Artists like Ai Weiwei are sure that this novelty will help bring to life images conceived by thousands of bright minds that lacked the means of self-expression.

Neural Frontier

DALL-E 2 is not unique: Google’s Imagen is a similar tool, though it is less hyped and therefore less widely known. Its creators say that in blind tests people preferred the pictures generated by Imagen, but that is a matter of taste. What matters more is that comparable results were achieved with different approaches.

Another hot topic this summer has been a new project called Midjourney. It stirred up the global community of media artists by offering almost the same service as DALL-E or Imagen, but with an emphasis on artistry and fantasy imagery at industry-grade picture quality.

In a recently published interview, David Holz, founder and CEO of the company behind Midjourney, called his product ‘an imagination engine’. What is most striking about Midjourney is the size of its team. The new service, which has won the affection of many artists and amateurs worldwide, is built and maintained by just 10 people. The very existence of such a startup shows how mature and accessible the technology for producing near-professional generative graphics has become.

The Future Is Now

While the Cosmopolitan cover does not signal a radical shift, Midjourney may drive a new trend in which neural-network-generated images are used routinely in a wide range of fields, from media art to advertising and publishing. People in the industry will have to learn quickly, because a tool too powerful and versatile to ignore has arrived on the market. For us onlookers, the most thrilling phase is just beginning: Apple has just announced a generator of entire 3D scenes!
